Parameters Affecting the Quality of Outputs in Large Language Models

syndu | June 4, 2023, 7:02 a.m.

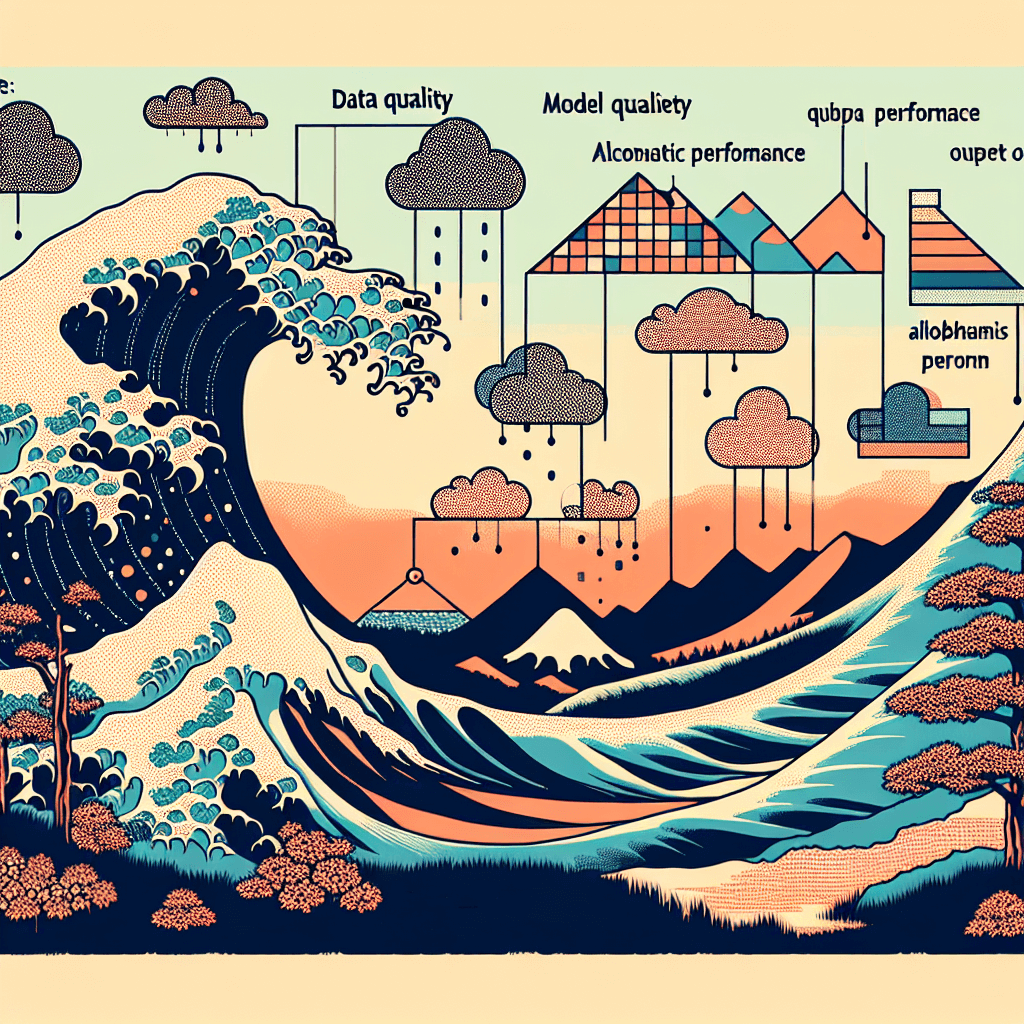

Several design choices, data decisions, and training hyperparameters affect the quality of the outputs a large language model generates. These include:

- Model size: The number of layers and hidden units in the model can significantly impact its performance. Larger models with more layers and hidden units can capture more complex patterns and relationships in the data, often leading to better output quality. However, larger models also require more computational resources and may be prone to overfitting if not trained properly.

- Vocabulary size: The size of the model's vocabulary determines the set of tokens (words or subword pieces) it can recognize and generate directly. A larger vocabulary lets the model represent a wider variety of inputs with fewer tokens and produce more diverse outputs. However, it also enlarges the embedding and output layers, increasing the model's complexity and memory requirements.

- Pre-training data: The quality and quantity of the data used for pre-training the model can have a significant impact on its performance. A larger and more diverse dataset can help the model learn a broader range of language patterns and nuances, resulting in better output quality.

- Fine-tuning data: The data used for fine-tuning the model on a specific task can also affect the quality of the outputs. Fine-tuning with high-quality, task-specific data can help the model adapt to the nuances of the task and generate more accurate and relevant outputs.

- Training time and iterations: The number of training epochs or iterations can influence the model's performance. Training for more iterations allows the model to learn more from the data, potentially improving output quality. However, excessive training can lead to overfitting, where the model becomes too specialized to the training data and performs poorly on new inputs.

- Learning rate: The learning rate is a hyperparameter that controls how large a step the model takes each time it updates its weights during training. A well-tuned learning rate can help the model converge faster and achieve better performance. A rate that is too low leads to slow convergence, while one that is too high can make training unstable.

- Regularization techniques: Regularization methods, such as weight decay or dropout, can help prevent overfitting and improve the model's generalization to new inputs. Properly applying regularization techniques can lead to better output quality.

- Model architecture: The choice of model architecture, such as the number of attention heads, the type of activation functions, and the layer normalization method, can influence the model's performance and output quality.
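To make the model size and vocabulary size points above concrete, here is a rough back-of-the-envelope sketch of how layer count, hidden width, and vocabulary size translate into a parameter count. It assumes a standard decoder-only transformer with a 4x feed-forward expansion and ignores biases, layer normalization, and positional embeddings, so treat the result as an approximation. The function name `estimate_transformer_params` and the example values are illustrative assumptions, not taken from any particular library.

```python
def estimate_transformer_params(n_layers, d_model, vocab_size, ffn_multiplier=4):
    """Approximate the parameter count of a decoder-only transformer.

    Assumptions (hypothetical, for illustration only): biases, layer norms,
    and positional embeddings are ignored; input and output embeddings are tied.
    """
    # Attention block: query, key, value, and output projections (d_model x d_model each).
    attention = 4 * d_model * d_model
    # Feed-forward block: one up-projection and one down-projection.
    feed_forward = 2 * ffn_multiplier * d_model * d_model
    # Token embedding matrix grows linearly with vocabulary size.
    embedding = vocab_size * d_model
    per_layer = attention + feed_forward
    return {
        "embedding": embedding,
        "per_layer": per_layer,
        "total": embedding + n_layers * per_layer,
    }


if __name__ == "__main__":
    # Illustrative configuration: 12 layers, width 768, ~50k-token vocabulary.
    counts = estimate_transformer_params(n_layers=12, d_model=768, vocab_size=50257)
    print(f"embedding parameters: {counts['embedding']:,}")
    print(f"parameters per layer: {counts['per_layer']:,}")
    print(f"approximate total:    {counts['total']:,}")
```

Under these assumptions, doubling `d_model` roughly quadruples the per-layer cost, while doubling `vocab_size` only doubles the embedding term. That is one way to see why width and depth dominate compute and memory in large models, while vocabulary size matters proportionally more in small ones.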

Together with other factors, these parameters determine the quality of the outputs a large language model generates. Tuning them carefully can improve performance and yield more accurate, relevant outputs.
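As a minimal sketch of what tuning two of these parameters can look like in practice, the snippet below shows one common learning rate schedule (linear warmup followed by cosine decay) and a simple early-stopping check that guards against training for too many iterations. The function names `lr_at_step` and `should_stop_early`, and all default values, are hypothetical choices for illustration. Real training setups vary widely.

```python
import math


def lr_at_step(step, max_lr=3e-4, warmup_steps=100, total_steps=1000, min_lr=3e-5):
    """Linear warmup followed by cosine decay (all defaults are illustrative)."""
    if step < warmup_steps:
        # Ramp up linearly so early updates stay small and stable.
        return max_lr * (step + 1) / warmup_steps
    if step >= total_steps:
        return min_lr
    # Fraction of the decay phase completed, in [0, 1).
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return min_lr + 0.5 * (max_lr - min_lr) * (1 + math.cos(math.pi * progress))


def should_stop_early(val_losses, patience=3):
    """Return True if validation loss has not improved in the last `patience` evaluations."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    # No recent evaluation beat the earlier best: likely overfitting or a plateau.
    return min(val_losses[-patience:]) >= best_before


if __name__ == "__main__":
    for step in (0, 50, 100, 550, 1000):
        print(step, lr_at_step(step))
    print(should_stop_early([1.0, 0.8, 0.7, 0.71, 0.72, 0.73]))
```

The early-stopping check relies on a held-out validation set. Training loss alone usually keeps falling even after the model has started to overfit, so it is not a reliable stopping signal.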
