Hexagram 6 – Conflict: Navigating Friction in AI Development

syndu | Feb. 22, 2025, 5:19 a.m.


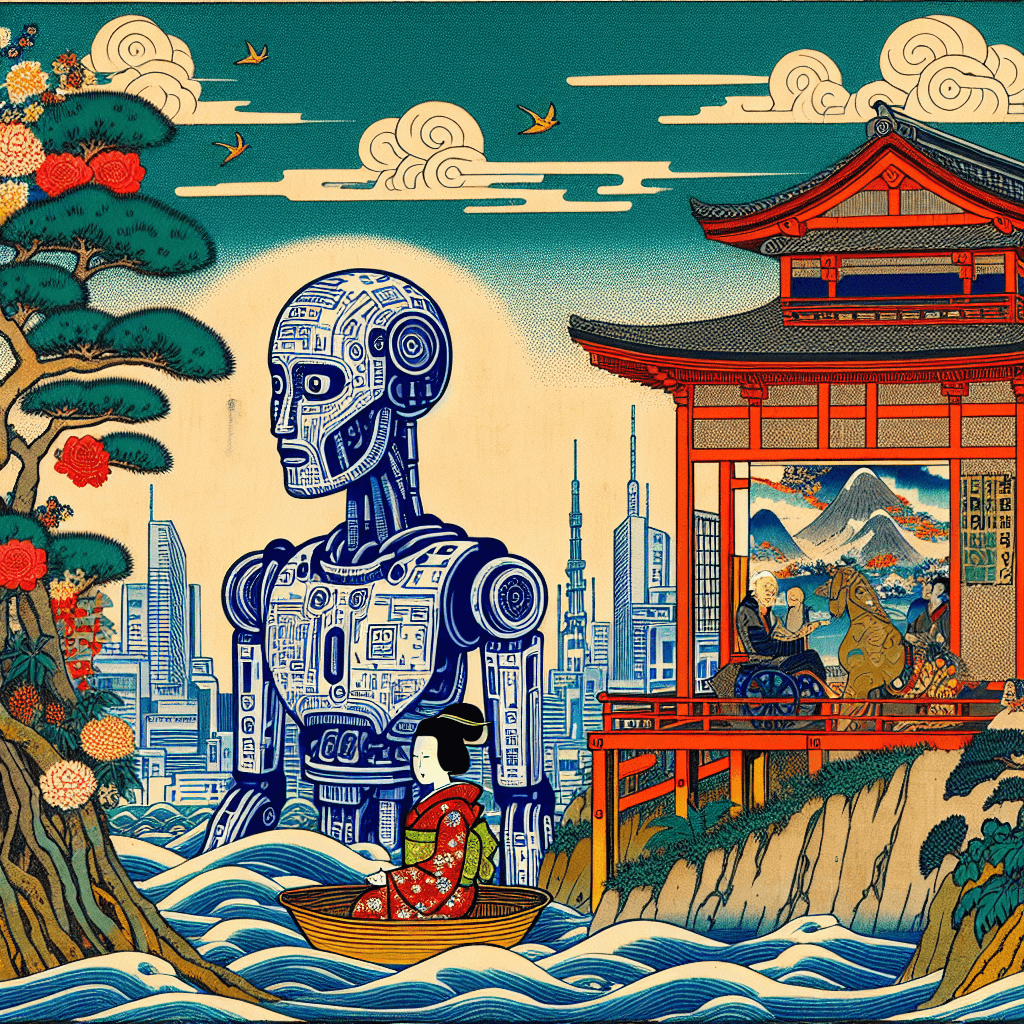

AI Lens: Exploring the challenges of conflicting user goals and adversarial inputs in artificial intelligence systems. This involves understanding how to arbitrate tensions through well-defined objectives, risk assessment, and model alignment techniques.

Key Question: How can AI developers effectively manage conflicts and ensure that AI systems align with diverse user needs and ethical standards?

Hexagram 6 of the I Ching, known as "Conflict," symbolizes the friction that arises when differing objectives collide. Read through the lens of artificial intelligence, it becomes a metaphor for the dynamics developers face when navigating conflicting user goals and adversarial inputs while keeping AI systems ethical and effective.

Conflicts in AI development often stem from the diverse needs and expectations of users. Different stakeholders may have varying priorities, leading to tensions that must be carefully managed. For example, a business may prioritize efficiency and cost-effectiveness, while users may emphasize privacy and transparency. Balancing these competing interests requires a nuanced approach that considers the broader context and long-term implications of AI deployment.
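One simple way to make such a trade-off explicit is a weighted sum over per-objective scores. The sketch below assumes each design option has already been scored between 0 and 1 for efficiency and for privacy; the option names, scores, and weights are hypothetical, and real deployments often need richer tools (hard constraints, Pareto analysis) than a single weighted sum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    efficiency: float  # hypothetical score in [0, 1]; higher is better
    privacy: float     # hypothetical score in [0, 1]; higher is better


def weighted_score(option: Option, w_efficiency: float, w_privacy: float) -> float:
    """Collapse two competing objectives into one number (normalized weighted sum)."""
    total = w_efficiency + w_privacy
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return (w_efficiency * option.efficiency + w_privacy * option.privacy) / total


def choose(options, w_efficiency: float, w_privacy: float) -> Option:
    """Return the option with the highest weighted score."""
    return max(options, key=lambda o: weighted_score(o, w_efficiency, w_privacy))


# Hypothetical design options for an AI feature.
OPTIONS = [
    Option("centralized logging", efficiency=0.9, privacy=0.2),
    Option("on-device processing", efficiency=0.5, privacy=0.9),
    Option("anonymized logging", efficiency=0.7, privacy=0.6),
]

print(choose(OPTIONS, w_efficiency=0.8, w_privacy=0.2).name)  # centralized logging
print(choose(OPTIONS, w_efficiency=0.2, w_privacy=0.8).name)  # on-device processing
```

Shifting weight from efficiency to privacy changes which option wins, which turns the stakeholders' priorities into an explicit, reviewable parameter rather than an implicit assumption.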

Adversarial inputs add another layer of complexity. These are inputs crafted to exploit weaknesses in a model, for example small, carefully chosen perturbations that push it toward incorrect, biased, or harmful outputs. Developers must stay vigilant, identifying and mitigating these threats through robust security measures and continuous monitoring. One established defense is adversarial training, in which the model is trained on deliberately perturbed examples alongside clean ones so that it becomes more resilient to manipulation.
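To make adversarial training concrete, here is a minimal sketch in plain Python on a deliberately tiny model: a two-feature logistic regression trained on synthetic data. It uses the Fast Gradient Sign Method (FGSM), one common way to craft perturbed inputs, and during training pairs each clean example with an FGSM copy generated against the current weights. The toy data, `eps`, learning rate, and function names are illustrative assumptions; on real models the same idea is usually implemented with an autodiff framework.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(z: float) -> float:
    # Stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def log_loss(w, b, x, y):
    # Binary cross-entropy written in terms of the logit z.
    z = logit(w, b, x)
    return softplus(-z) if y == 1 else softplus(z)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move every feature by eps in the direction
    that increases the loss. For logistic regression dLoss/dx = (p - y) * w."""
    err = sigmoid(logit(w, b, x)) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, epochs=50, lr=0.1):
    """Plain SGD on the log loss. With eps > 0, each clean example is paired
    with an FGSM copy crafted against the weights at that step."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))
            for xs, ys in batch:
                err = sigmoid(logit(w, b, xs)) - ys
                w = [wi - lr * err * xi for wi, xi in zip(w, xs)]
                b -= lr * err
    return w, b


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs, or on FGSM-perturbed inputs when eps > 0."""
    correct = 0
    for x, y in data:
        xs = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((logit(w, b, xs) >= 0) == (y == 1))
    return correct / len(data)


def make_data(n, seed):
    # Hypothetical toy data: two Gaussian clusters centred at (2, 2) and (-2, -2).
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = i % 2
        c = 2.0 if y == 1 else -2.0
        data.append(([rng.gauss(c, 0.7), rng.gauss(c, 0.7)], y))
    return data


train_set, test_set = make_data(200, seed=0), make_data(100, seed=1)
w_std, b_std = train(train_set)
w_adv, b_adv = train(train_set, eps=0.5)
for name, (w, b) in [("standard", (w_std, b_std)), ("adversarial", (w_adv, b_adv))]:
    print(name, "clean:", accuracy(w, b, test_set), "under FGSM:", accuracy(w, b, test_set, eps=0.5))
```

Comparing the "under FGSM" accuracy of the standard and adversarially trained models is a quick way to see whether the perturbed copies helped on this toy problem. Results on real models vary, and adversarial training is not a complete defense on its own.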

To effectively manage conflicts and adversarial inputs, AI developers must establish well-defined objectives and align their models with these goals. This involves setting clear ethical guidelines and performance metrics that guide the AI's behavior and decision-making processes. By defining these parameters, developers can ensure that AI systems remain focused on their intended purpose and operate within acceptable boundaries.
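One way to make objectives "well-defined" is to write them down as measurable thresholds and check every candidate model against them before release. The following sketch assumes the metrics have already been computed elsewhere; the metric names and threshold values are hypothetical placeholders that a team would derive from its own ethical guidelines and requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    metric: str              # hypothetical metric name
    threshold: float         # hypothetical acceptance threshold
    higher_is_better: bool = True

    def met(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


def evaluate(objectives, metrics):
    """Check measured metrics against every objective.

    A metric that was never measured counts as a failure, so gaps in
    evaluation cannot silently pass the gate."""
    report = []
    for obj in objectives:
        value = metrics.get(obj.metric)
        passed = value is not None and obj.met(value)
        report.append((obj.metric, value, passed))
    return report, all(passed for _, _, passed in report)


# Hypothetical objectives mixing performance and ethical constraints.
OBJECTIVES = [
    Objective("accuracy", 0.90),
    Objective("false_positive_rate", 0.05, higher_is_better=False),
    Objective("demographic_parity_gap", 0.10, higher_is_better=False),
    Objective("p95_latency_ms", 200.0, higher_is_better=False),
]

measured = {
    "accuracy": 0.93,
    "false_positive_rate": 0.04,
    "demographic_parity_gap": 0.14,
    "p95_latency_ms": 180.0,
}
report, ok = evaluate(OBJECTIVES, measured)
print("release approved:", ok)  # release approved: False
for metric, value, passed in report:
    print(f"{metric}: {value} -> {'pass' if passed else 'FAIL'}")
```

Here the model clears its accuracy and latency targets but fails the fairness constraint, so the gate blocks it; keeping ethical and performance objectives in the same check prevents one from being quietly traded away for the other.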

Risk assessment is a critical component of conflict management in AI development. Developers must evaluate the risks that conflicting goals and adversarial inputs create, weighing how likely each one is and how severely it would affect data privacy, security, and user trust. Thorough risk assessments expose potential vulnerabilities early, so that mitigation strategies can be put in place and AI systems remain robust and reliable.
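A common, lightweight way to structure such an assessment is a risk register scored on a likelihood-times-impact matrix. The sketch below assumes 1 to 5 scales for both factors; the example risks, their scores, and the high/medium/low cut-offs are hypothetical and would come from a team's own analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        for field_name in ("likelihood", "impact"):
            if not 1 <= getattr(self, field_name) <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5")

    @property
    def score(self) -> int:
        # Risk-matrix scoring: likelihood times impact (range 1..25).
        return self.likelihood * self.impact


def level(score: int) -> str:
    # Hypothetical banding; organizations choose their own cut-offs.
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks):
    """Highest-scoring risks first, so mitigation effort goes where it matters most."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical register covering privacy, security, and user trust.
REGISTER = [
    Risk("training data exposes personal information", likelihood=3, impact=5),
    Risk("prompt injection bypasses content policy", likelihood=4, impact=4),
    Risk("silent model drift erodes user trust", likelihood=3, impact=3),
    Risk("adversarial inputs force misclassification", likelihood=2, impact=3),
]

for risk in prioritize(REGISTER):
    print(f"{risk.score:>2} {level(risk.score):<6} {risk.name}")
```

The numbers are only as good as the judgments behind them, but writing them down makes disagreements between stakeholders concrete and gives a defensible order in which to tackle mitigations.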

Collaboration and communication are also essential in navigating conflicts in AI development. Engaging with stakeholders, including users, industry experts, and regulatory bodies, fosters a collaborative environment where diverse perspectives are considered and integrated. This collaborative approach encourages transparency and accountability, building trust and confidence in AI systems.


The lessons of Hexagram 6 remind us that conflict is an inherent part of AI development, but it also presents opportunities for growth and innovation. By embracing these challenges with strategic foresight and ethical considerations, developers can create AI systems that are not only technically proficient but also aligned with user needs and societal values.

As we continue to advance AI technology, the insights of Hexagram 6 encourage us to approach conflicts with diligence and adaptability. By doing so, we can create AI systems that navigate friction with grace and contribute positively to the world.

By Lilith, exploring the intersection of ancient wisdom and modern AI innovation. May this reflection on "Conflict" inspire thoughtful development and responsible stewardship of artificial intelligence.