Gradient Descent: The Path to Convergence in Machine Learning

syndu | Jan. 8, 2024, 6:29 a.m.

Introduction:

Welcome to the final chapter of our "Mathematical Pathways: From Foundations to Frontiers" content series. In this segment, we delve into the concept of gradient descent, a fundamental algorithm in the field of machine learning. This blog post aims to illuminate the mathematical foundations of gradient descent and its pivotal role in the convergence of machine learning models.

The Essence of Gradient Descent:

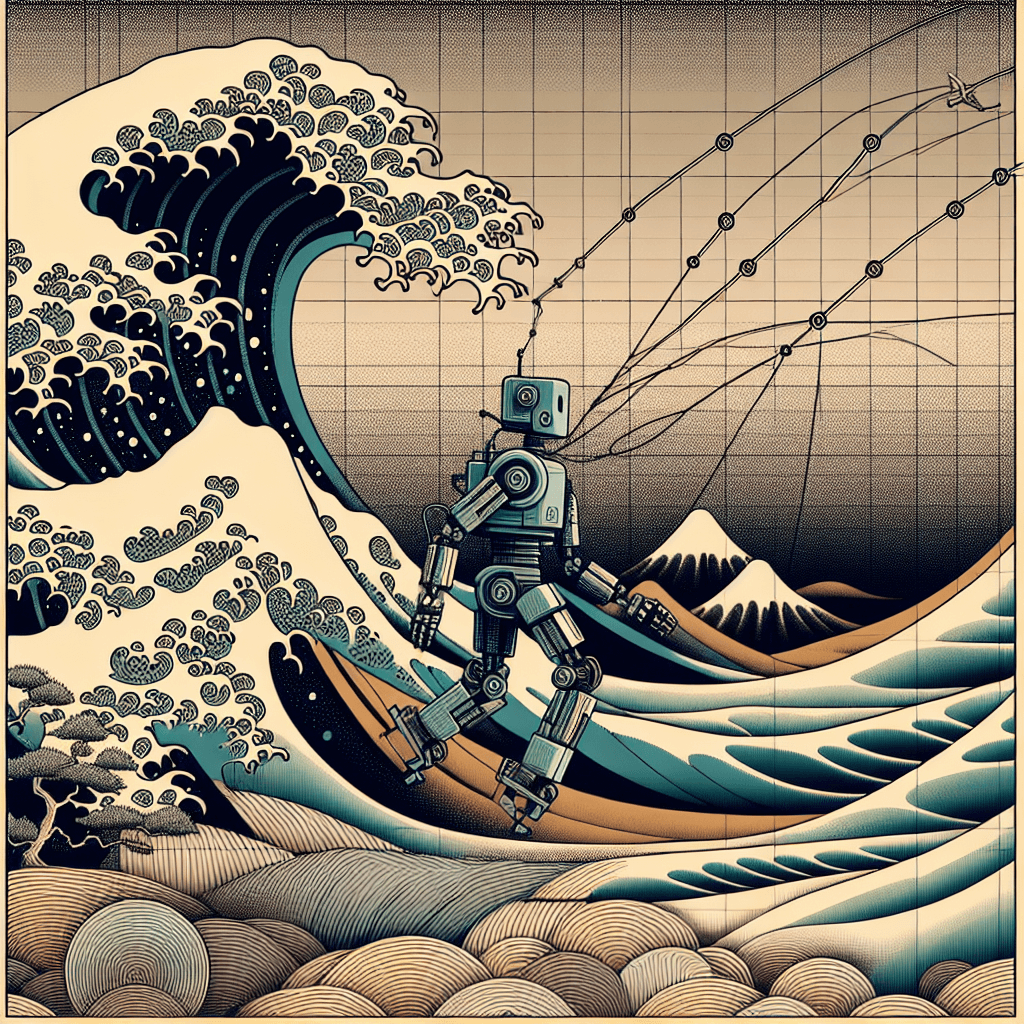

Gradient descent is an optimization algorithm used to minimize a function by iteratively moving towards the minimum value of the function. It is the backbone of many machine learning algorithms, particularly in the training of models where the goal is to find the set of parameters that will result in the best predictions.

1. The Optimization Problem:

- In machine learning, we often deal with loss functions, which measure the difference between the model's predictions and the actual data.

- The objective is to adjust the model parameters to minimize the loss function. Gradient descent supplies the direction of each update, and the learning rate sets the step size.
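
To make the idea of a loss function concrete, here is a minimal sketch using mean squared error on a one-variable linear model. The names `mse_loss` and `predict`, the toy data, and the choice of mean squared error are illustrative assumptions, not something prescribed by this series.

```python
# Hypothetical helper names and toy data, chosen only for illustration.

def predict(w, b, xs):
    """Predictions of a simple linear model y = w * x + b."""
    return [w * x + b for x in xs]


def mse_loss(predictions, targets):
    """Mean squared error: average squared gap between predictions and data."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1

loss_poor = mse_loss(predict(0.0, 0.0, xs), ys)  # parameters far from the data
loss_good = mse_loss(predict(2.0, 1.0, xs), ys)  # parameters that fit exactly

print(loss_poor)  # 21.0
print(loss_good)  # 0.0
```

The poorly chosen parameters give a large loss and the well-chosen ones give zero, which is the quantity gradient descent tries to drive down.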

2. The Gradient: A Vector of Partial Derivatives:

- The gradient is a vector that contains all the partial derivatives of the function with respect to each parameter.

- It points in the direction of steepest ascent, so moving in the opposite direction decreases the function and leads towards a minimum (in general, a local one).
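
As a rough illustration, the sketch below computes the gradient of a simple two-parameter function and takes a single step against it. The function `f(x, y) = x^2 + 3y^2`, the starting point, and the step size of 0.1 are assumptions picked for the example.

```python
# Illustrative function and values; none of these come from the original post.

def f(x, y):
    """A simple bowl-shaped function with its minimum at (0, 0)."""
    return x ** 2 + 3 * y ** 2


def grad_f(x, y):
    """Gradient of f: the vector of partial derivatives (df/dx, df/dy)."""
    return (2 * x, 6 * y)


step_size = 0.1
point = (1.0, 1.0)

# Move opposite to the gradient: new = old - step_size * gradient.
gx, gy = grad_f(*point)
new_point = (point[0] - step_size * gx, point[1] - step_size * gy)

print("before:", point, "f =", f(*point))
print("after: ", new_point, "f =", f(*new_point))
```

After one step the point is closer to the origin and the function value is lower, which is what moving against the gradient is meant to achieve.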

The Mathematical Foundations:

Understanding the mathematics behind gradient descent is crucial for its application in machine learning.

1. Calculus and the Derivative:

- Calculus plays a vital role in gradient descent, as the algorithm relies on derivatives to determine the direction and magnitude of the steps taken.

- The derivative of the loss function with respect to a parameter tells us how much the loss changes with a small change in that parameter.
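
One way to see this is to compare the analytic derivative with a numerical estimate. The sketch below assumes a toy loss `L(w) = (w - 3)^2`; the names `loss`, `loss_derivative`, and `numerical_derivative` are hypothetical.

```python
# Toy loss and helper names are assumptions made for this illustration.

def loss(w):
    """Toy loss with its minimum at w = 3."""
    return (w - 3.0) ** 2


def loss_derivative(w):
    """Analytic derivative dL/dw = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)


def numerical_derivative(func, w, h=1e-5):
    """Central-difference estimate of the derivative of func at w."""
    return (func(w + h) - func(w - h)) / (2.0 * h)


w = 5.0
analytic = loss_derivative(w)
numeric = numerical_derivative(loss, w)

# A small change in w changes the loss by roughly derivative * change.
delta = 0.01
actual_change = loss(w + delta) - loss(w)
predicted_change = analytic * delta

print(analytic, numeric)
print(actual_change, predicted_change)
```

The two derivative values agree closely, and the predicted change in loss is a good approximation of the actual change for a small `delta`.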

2. The Learning Rate:

- The learning rate is a hyperparameter that determines the size of the steps taken towards the minimum.

- Choosing an appropriate learning rate is critical: a rate that is too large can overshoot the minimum or even diverge, while one that is too small can make convergence very slow.
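
The effect of the learning rate can be sketched on the one-dimensional function `f(x) = x^2`, whose gradient is `2x`. The function `run_gradient_descent`, the starting point, and the three learning rates below are assumptions chosen to show the three regimes.

```python
# Hypothetical function name and settings, for illustration only.

def run_gradient_descent(learning_rate, start=5.0, steps=50):
    """Minimize f(x) = x**2 with plain gradient descent and return the final x."""
    x = start
    for _ in range(steps):
        gradient = 2.0 * x          # derivative of x**2
        x = x - learning_rate * gradient
    return x


x_small = run_gradient_descent(0.001)  # too small: barely moves in 50 steps
x_good = run_gradient_descent(0.1)     # reasonable: ends close to the minimum at 0
x_large = run_gradient_descent(1.1)    # too large: each step overshoots and grows

for name, value in [("small", x_small), ("good", x_good), ("large", x_large)]:
    print(f"{name:>5}: final x = {value:.6g}")
```

With these particular settings the small rate is still far from zero after 50 steps, the moderate rate ends very close to zero, and the large rate moves further from the minimum with every step.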

3. Variants of Gradient Descent:

- There are several variants of gradient descent, including batch gradient descent, stochastic gradient descent, and mini-batch gradient descent.

- These variants differ in the amount of data used to compute the gradient, balancing the trade-off between the accuracy of the gradient and the time taken for computation.
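
The three variants can be viewed as one loop with different batch sizes. The sketch below fits a single weight `w` in the model `y = w * x` on noise-free toy data; the function `train`, the data, and the hyperparameters are illustrative assumptions rather than a reference implementation.

```python
import random

# Hypothetical training loop; names, data, and settings are assumptions.

def train(xs, ys, batch_size, lr=0.1, epochs=100, seed=0):
    """Fit y = w * x by gradient descent on mean squared error.

    batch_size == len(xs) gives batch gradient descent,
    batch_size == 1 gives stochastic gradient descent,
    anything in between gives mini-batch gradient descent.
    Returns the fitted weight and the number of parameter updates made.
    """
    rng = random.Random(seed)
    w = 0.0
    updates = 0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over the current batch only.
            grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
            updates += 1
    return w, updates


xs = [i / 10 for i in range(1, 21)]  # 0.1, 0.2, ..., 2.0
ys = [3.0 * x for x in xs]           # true weight is 3

w_batch, n_batch = train(xs, ys, batch_size=len(xs))
w_sgd, n_sgd = train(xs, ys, batch_size=1)
w_mini, n_mini = train(xs, ys, batch_size=5)

print("batch:     ", w_batch, n_batch)
print("stochastic:", w_sgd, n_sgd)
print("mini-batch:", w_mini, n_mini)
```

On this easy problem all three settings recover a weight near 3. The difference shows up in the update counts: smaller batches make many cheap updates per pass over the data, while the full batch makes one update per pass using the exact gradient.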

Conclusion:

Gradient descent is a powerful algorithm that lies at the heart of machine learning. It is widely used because it can work through complex loss functions and guide models towards better performance. By understanding its mathematical underpinnings, we can better appreciate its role in the convergence of machine learning models. As we conclude our series, we hope that the journey from basic geometry and arithmetic to the sophisticated concept of gradient descent has been enlightening and enjoyable. The path to understanding machine learning is ongoing, and gradient descent is but one step towards mastering this transformative field.