Exploring the World of Language Models: From Self-Attention to Output Quality

syndu | June 4, 2023, 7:07 a.m.


Introduction: In recent years, natural language processing (NLP) has advanced rapidly, driven largely by the development of powerful language models. In this blog post, we will discuss the groundbreaking 2017 paper "Attention Is All You Need" (Vaswani et al.), the self-attention mechanism it introduced, and the architectures that evolved from it. We will also explore the factors that affect the quality of outputs generated by large language models.

The Self-Attention Mechanism:

The paper "Attention Is All You Need" introduced the Transformer, which relies on self-attention to weigh how relevant every other token in a sequence is when building the representation of each token. Because any two positions are connected in a single step, and all positions can be processed in parallel, the Transformer handles long-range dependencies more efficiently than recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, which must pass information through the sequence one step at a time. The Transformer has become the foundation for many state-of-the-art NLP models, including BERT, GPT, and T5.
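To make the mechanism concrete, here is a minimal sketch of single-head scaled dot-product self-attention, softmax(QK^T / sqrt(d_k)) V, written in plain Python so it runs without extra libraries. It assumes a single sequence, a single head, and no masking; the projection matrices `w_q`, `w_k`, and `w_v` stand in for weights a real model would learn, and the helper names are illustrative only.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: one row per token (seq_len x d_model).
    w_q, w_k, w_v: projection matrices (d_model x d_k), normally learned.
    Returns the output rows and the attention-weight matrix.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    # Every token is scored against every token in one step: QK^T / sqrt(d_k)
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

# Usage: three toy "tokens" with identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, eye, eye, eye)
```

Each output row is a weighted average of all value rows, which is why distant tokens can influence each other directly rather than through a chain of recurrent steps.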

Architectures Evolved from Self-Attention:

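Several state-of-the-art architectures have evolved from the Transformer and its self-attention mechanism:

- BERT (Bidirectional Encoder Representations from Transformers): an encoder-only model pretrained with masked language modeling

- GPT (Generative Pre-trained Transformer): a decoder-only model pretrained to predict the next token

- T5 (Text-to-Text Transfer Transformer): an encoder-decoder model that casts every task as text-to-text

- RoBERTa (Robustly Optimized BERT Pretraining Approach): BERT with a refined pretraining recipe (more data, longer training, dynamic masking, no next-sentence prediction)

- XLNet: generalized autoregressive pretraining over permutations of the factorization order, built on Transformer-XL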

These architectures have significantly advanced the field of NLP, enabling more accurate and efficient models for a wide range of tasks.
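One practical difference among these architectures is which positions each token may attend to. A BERT-style encoder lets every token attend to every other token (bidirectional), while a GPT-style decoder applies a causal mask so each token sees only itself and earlier tokens; T5 combines a bidirectional encoder with a causal decoder. The sketch below applies both masks to the same score matrix. The all-zero scores are made up for illustration, and the function names are hypothetical.

```python
import math

def causal_mask(n):
    """True where token i may attend to token j (only j <= i), as in GPT-style decoders."""
    return [[j <= i for j in range(n)] for i in range(n)]

def full_mask(n):
    """Every token may attend to every token, as in BERT-style encoders."""
    return [[True] * n for _ in range(n)]

def masked_softmax(scores, mask):
    """Row-wise softmax that gives zero weight to disallowed positions."""
    weights = []
    for row, allowed in zip(scores, mask):
        masked = [s if ok else -math.inf for s, ok in zip(row, allowed)]
        m = max(masked)  # finite, because the diagonal is always allowed
        exps = [math.exp(s - m) for s in masked]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

scores = [[0.0] * 3 for _ in range(3)]
causal = masked_softmax(scores, causal_mask(3))
bidirectional = masked_softmax(scores, full_mask(3))
```

With equal scores, the causal weights form a lower-triangular pattern (the first token attends only to itself), whereas the bidirectional weights are spread evenly across all three tokens.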

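Factors Affecting Output Quality:

The quality of outputs generated by large language models depends on several factors, most of which are design choices and training hyperparameters rather than the model's learned parameters:

- Model size (the number of learned parameters)

- Vocabulary size (the number of distinct tokens the tokenizer can produce)

- Pre-training data (its quantity, quality, and diversity)

- Fine-tuning data (task-specific examples used after pre-training)

- Training time and iterations

- Learning rate (and how it is scheduled during training)

- Regularization techniques (e.g., dropout and weight decay)

- Model architecture

Tuning these factors carefully can improve performance and yield more accurate, relevant outputs.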
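Two of the factors above lend themselves to quick calculations. The first helper gives a rough parameter count for a decoder-only Transformer, showing how model size and vocabulary size add up; it uses the common approximation of 12 * d_model^2 weights per layer and ignores biases and layer-norm weights, so treat it as an estimate. The second reproduces the learning-rate schedule described in "Attention Is All You Need" (linear warmup, then decay proportional to 1/sqrt(step), with warmup_steps = 4000 in the paper). Both helper names are hypothetical.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, context_len=0):
    """Rough parameter count for a decoder-only Transformer (estimate only).

    Per layer: 4 * d_model^2 for the Q, K, V and output projections plus
    8 * d_model^2 for a feed-forward block with hidden width 4 * d_model.
    Biases and layer-norm weights are ignored; the token embedding is assumed
    to be shared with the output layer.
    """
    per_layer = 12 * d_model ** 2
    # Token embeddings plus (optional) learned position embeddings
    embeddings = (vocab_size + context_len) * d_model
    return n_layers * per_layer + embeddings

def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Learning rate at a given step: linear warmup, then 1/sqrt(step) decay."""
    if step < 1:
        raise ValueError("step must be >= 1")
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

# A GPT-2-small-like configuration: 12 layers, width 768, 50,257 tokens, 1,024 positions.
n_params = approx_transformer_params(12, 768, 50257, context_len=1024)
peak_lr = transformer_lr(4000)  # the schedule peaks at the end of warmup
```

Here `n_params` comes to 124,318,464, close to the roughly 124M parameters usually cited for GPT-2 small, and nearly a third of that total sits in the embeddings, which is one reason vocabulary size matters. The learning rate rises until step 4000 and then decays.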

Conclusion: The introduction of the self-attention mechanism, and the architectures built on it such as BERT, GPT, and T5, have transformed the field of NLP. Understanding the factors that affect the quality of outputs generated by large language models can help researchers and practitioners optimize their models for better performance. As the field continues to evolve, we can expect even more powerful and efficient language models that can tackle a wide range of tasks and challenges.

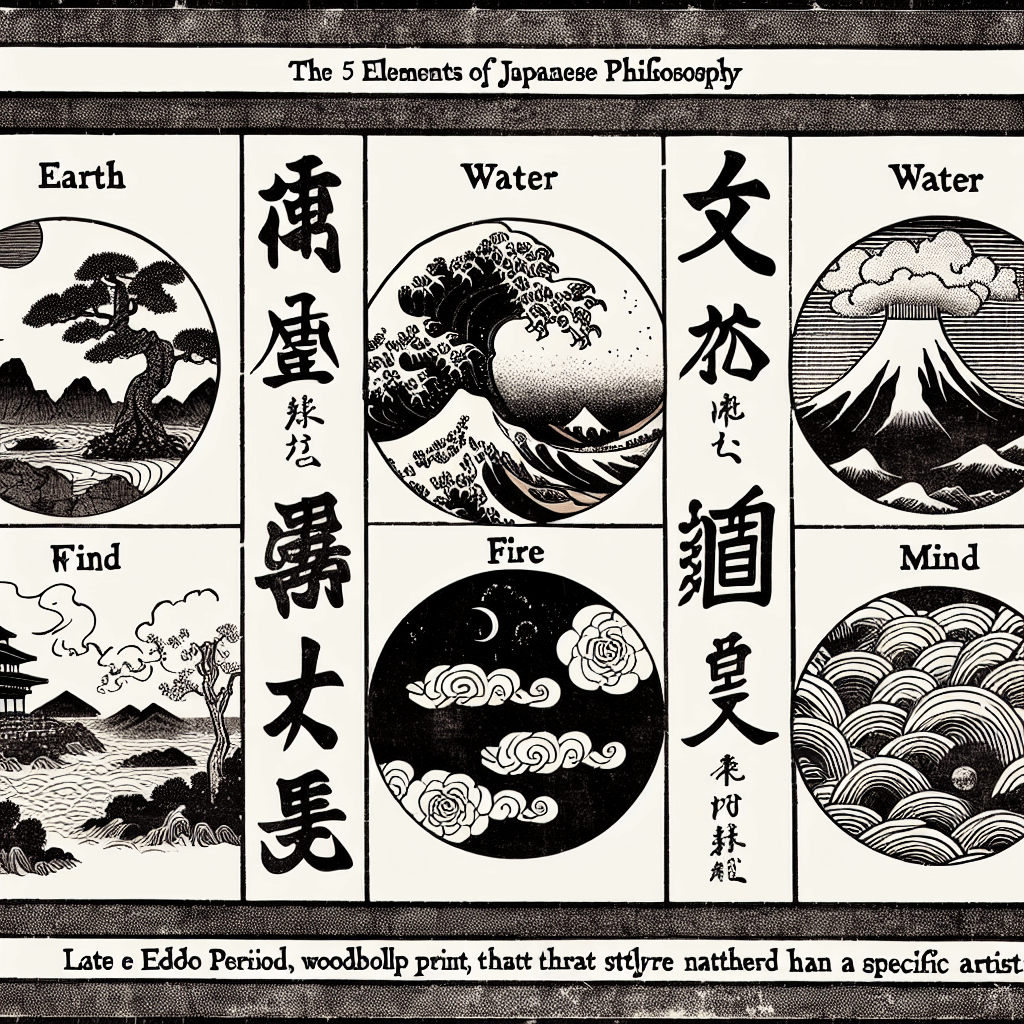
